Wave Field Synthesis with Real-time Control

- thesis (568K, pdf)

- 2-D OSC for WFSynth. A Java-based GUI for sending 2-D OSC messages to the WFSynth. Tests for multiple sources and a variety of typical WFS scenarios (point vs. plane wave stereo, cocktail effect demonstration, inner/outer sources, Doppler effect, room reflections, etc.).

- Or download from the SourceForge page.

The WFSynth is a real-time wave field synthesis rendering engine with a JACK interface. The project write-up, source code, and abstract are included on this page.


This project was accepted in completion of my master's degree at the University of California, Santa Barbara.

downloads

abstract

Wave field synthesis is an acoustic spatialization technique for creating virtual environments. Taking advantage of Huygens’ principle, wave fronts are simulated with a large array of speakers. Inside a defined listening space, the WFS speaker array reproduces incoming wave fronts emanating from an audio source at a virtual location. Current WFS implementations require offline computation, which limits their real-time capabilities for spatialization. Further, traditional wave field synthesis makes no allowance for speaker configurations extending into the third dimension.

A wave field synthesis system suitable for real-time applications and

capable of placing sources at the time of rendering is presented. The

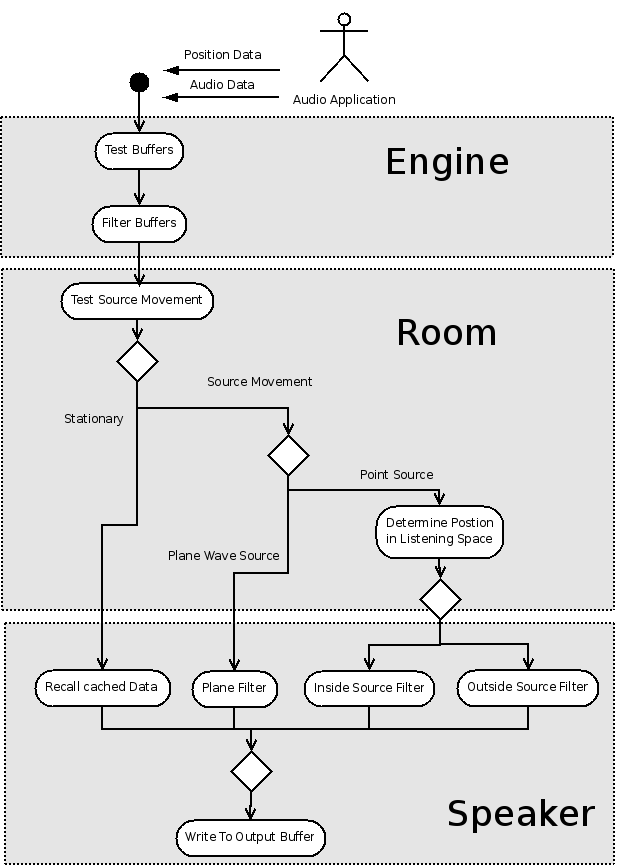

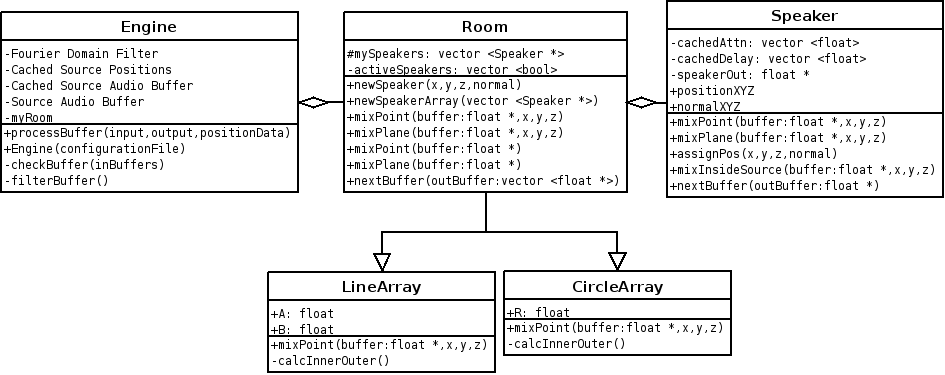

rendering process is broken into logical components for a fast and

extensible spatializer. A broad range of users and setup configurations

are considered in the design. The result is a model-based wave field

synthesis engine for real-time immersion applications.
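To make the Huygens-style idea in the abstract concrete, here is a minimal sketch of how a model-based renderer might turn a virtual point source position into a delay and gain for each speaker. It is not taken from the WFSynth source. The function name, the speaker layout, the speed of sound, and the simplified `1/sqrt(distance)` amplitude law are all assumptions made for illustration, and the sketch omits the pre-filtering and array tapering that a full WFS driving function would include.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed room-temperature value


def point_source_delays_gains(source, speakers, c=SPEED_OF_SOUND):
    """Return a (delay_seconds, gain) pair per speaker for a virtual point source.

    source   -- (x, y) position of the virtual source in meters
    speakers -- list of (x, y) speaker positions in meters
    Hypothetical helper: a simplified model, not the WFSynth algorithm.
    """
    params = []
    for sx, sy in speakers:
        dist = math.hypot(sx - source[0], sy - source[1])
        dist = max(dist, 1e-3)          # guard against a source on a speaker
        delay = dist / c                # propagation time from source to speaker
        gain = 1.0 / math.sqrt(dist)    # assumed simplified amplitude decay
        params.append((delay, gain))
    return params


# Eight speakers spaced 0.2 m apart on the x axis; source 2 m behind the array.
speakers = [(0.2 * i, 0.0) for i in range(8)]
params = point_source_delays_gains((0.7, -2.0), speakers)
for (x, _), (delay, gain) in zip(speakers, params):
    print(f"speaker x={x:.1f} m  delay={delay * 1000:.2f} ms  gain={gain:.3f}")
```

Because each speaker's parameters depend only on the current source position, they can be recomputed whenever the source moves, which is the property that makes placing sources at render time plausible.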